Kalman Szenes

PhD student in Theoretical Chemistry at the Reiher group, ETH Zurich

Vim and C++ enthusiast. Interested in the development of new theories and algorithms to solve problems in quantum chemistry. Passionate about high-performance computing.

“There are two people who understand spin: Dirac, and God, and Dirac is dead.”

— Michael Atiyah

Latest Posts

| Date | Post |
|---|---|
| Feb 16, 2025 | Never Miss an Important Publication |
| Feb 01, 2025 | Ferdium: the One Chat Platform to Rule Them All |
| Dec 02, 2024 | Display Images, PDFs, and GIFs Directly in Your Terminal |

Selected Publications

-

Efficient Implementation of the Spin-Free Renormalized Internally-Contracted Multireference Coupled Cluster Theory

Kalman Szenes, Riya Kayal, Kantharuban Sivalingam, Robin Feldmann, Frank Neese, and Markus Reiher

arXiv, 2025

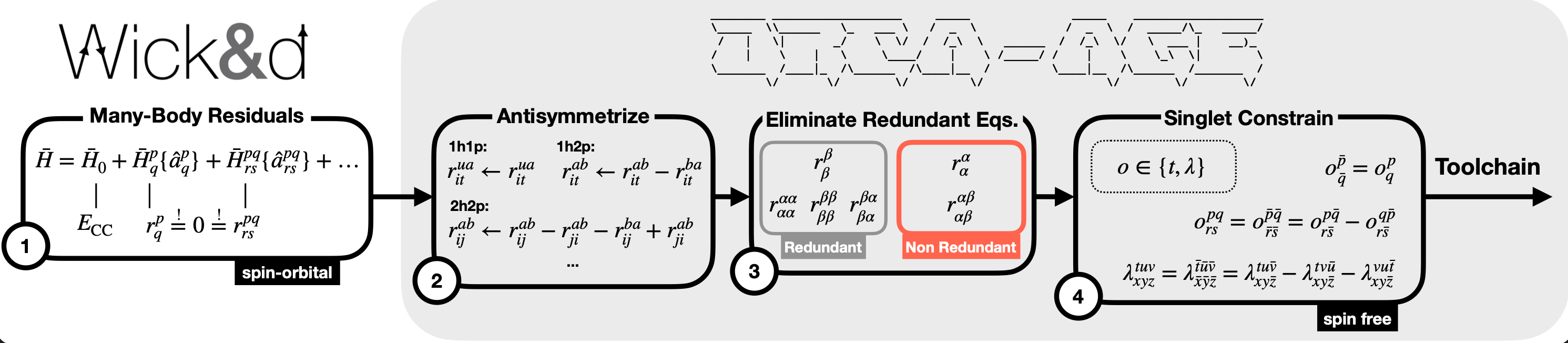

In this paper, an efficient implementation of the renormalized internally-contracted multireference coupled cluster with singles and doubles (RIC-MRCCSD) into the ORCA quantum chemistry program suite is reported. To this end, Evangelista’s Wick&d equation generator was combined with ORCA’s native AGE code generator in order to implement the many-body residuals required for the RIC-MRCCSD method. Substantial efficiency gains are realized by deriving a spin-free formulation instead of the previously reported spin-orbital version developed by some of us. Since AGE produces parallelized code, the resulting implementation can directly be run in parallel with substantial speedups when executed on multiple cores. In terms of runtime, the cost of RIC-MRCCSD is shown to be between single-reference RHF-CCSD and UHF-CCSD, even when active spaces as large as CAS(14,14) are considered. This achievement is largely due to the fact that no reduced density matrices (RDMs) or cumulants higher than three-body enter the formalism. The scalability of the method to large systems is furthermore demonstrated by computing the ground state of a vitamin B12 model comprised of an active space of CAS(12,12) and 809 orbitals. In terms of accuracy, RIC-MRCCSD is carefully compared to second- and approximate fourth-order n-electron valence state perturbation theories (NEVPT2, NEVPT4(SD)), to the multireference zeroth-order coupled-electron pair approximation (CEPA(0)), as well as to the IC-MRCCSD from Köhn. In contrast to RIC-MRCCSD, the IC-MRCCSD equations are entirely derived by AGE using the conventional projection-based approach, which, however, leads to much higher algorithmic complexity than the former as well as the necessity to calculate up to five-body RDMs. Remaining challenges such as the variation of the results with the flow, a free parameter that enters the RIC-MRCCSD theory, are discussed.

@article{szenesEfficientImplementationSpinFree2025, author = {Szenes, Kalman and Kayal, Riya and Sivalingam, Kantharuban and Feldmann, Robin and Neese, Frank and Reiher, Markus}, title = {Efficient {{Implementation}} of the {{Spin-Free Renormalized Internally-Contracted Multireference Coupled Cluster Theory}}}, year = {2025}, journal = {arXiv}, number = {arXiv:2511.03567}, doi = {10.48550/arXiv.2511.03567}, }

-

QCMaquis 4.0: Multipurpose Electronic, Vibrational, and Vibronic Structure and Dynamics Calculations with the Density Matrix Renormalization Group

Kalman Szenes, Nina Glaser, Mihael Erakovic, Valentin Barandun, Maximilian Mörchen, Robin Feldmann, Stefano Battaglia, Alberto Baiardi, and Markus Reiher

J. Phys. Chem. A, 2025

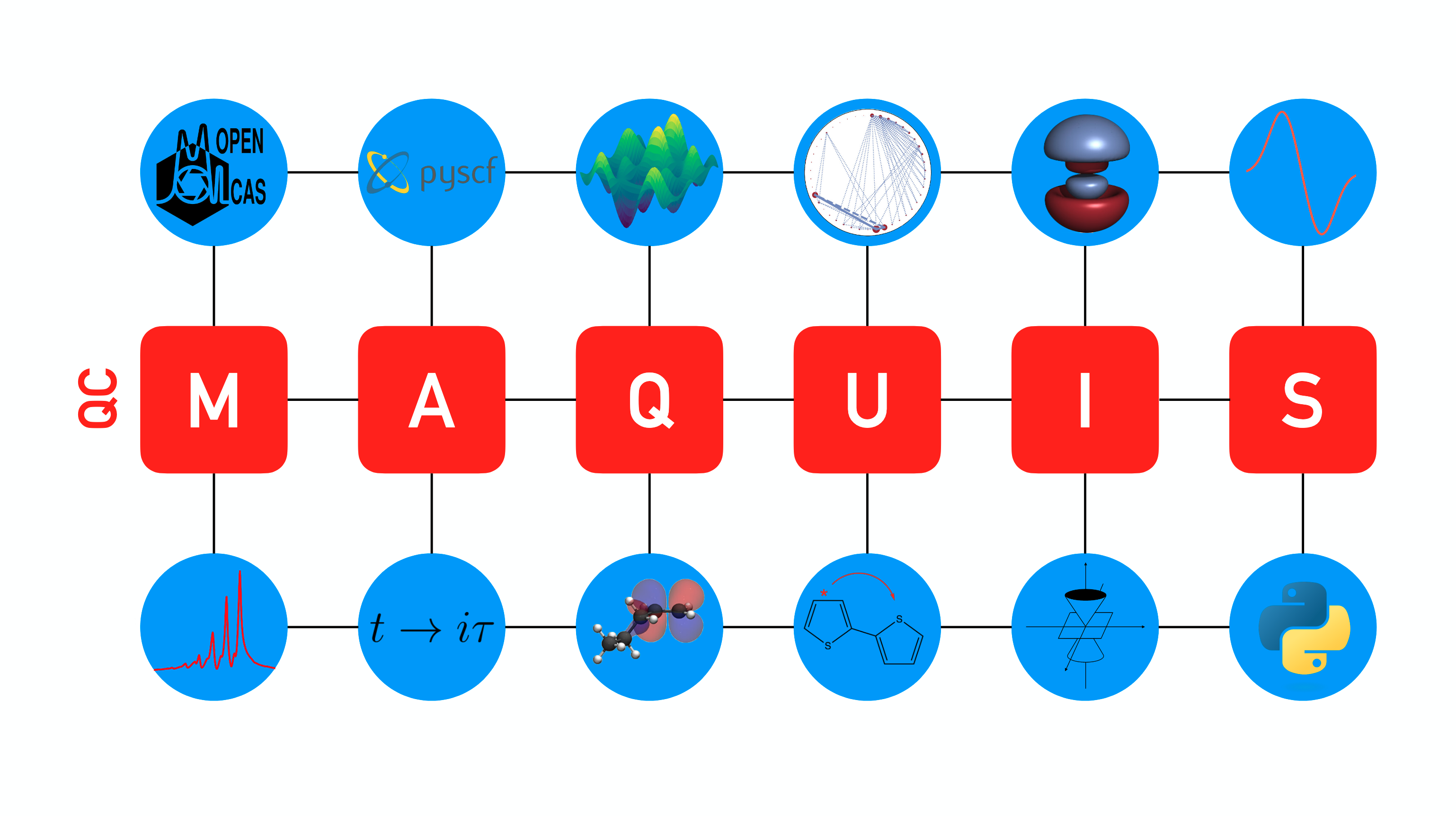

QCMaquis is a quantum chemistry software package for general molecular structure calculations in a matrix product state/matrix product operator formalism of the density matrix renormalization group (DMRG). It supports a wide range of features for electronic structure, multicomponent (pre-Born-Oppenheimer), anharmonic vibrational structure, and vibronic calculations. In addition to the ground and excited state solvers, QCMaquis allows for time propagation of matrix product states based on the tangent-space formulation of time-dependent DMRG. The latest developments include transcorrelated electronic structure calculations, very recent vibrational and vibronic models, and a convenient Python wrapper, facilitating the interface with external libraries. This paper reviews all the new features of QCMaquis and demonstrates them with new results.

@article{szenesQCMaquis40Multipurpose2025, title = {{{QCMaquis}} 4.0: {{Multipurpose Electronic}}, {{Vibrational}}, and {{Vibronic Structure}} and {{Dynamics Calculations}} with the {{Density Matrix Renormalization Group}}}, author = {Szenes, Kalman and Glaser, Nina and Erakovic, Mihael and Barandun, Valentin and M{\"o}rchen, Maximilian and Feldmann, Robin and Battaglia, Stefano and Baiardi, Alberto and Reiher, Markus}, year = {2025}, volume = {129}, number = {32}, pages = {7549--7574}, doi = {10.1021/acs.jpca.5c02970}, journal = {J. Phys. Chem. A}, }

-

Striking the right balance of encoding electron correlation in the Hamiltonian and the wavefunction ansatz

Kalman Szenes, Maximilian Mörchen, Paul Fischill, and Markus Reiher

Faraday Discussion on Correlated Electronic Structure, London, UK, 2024

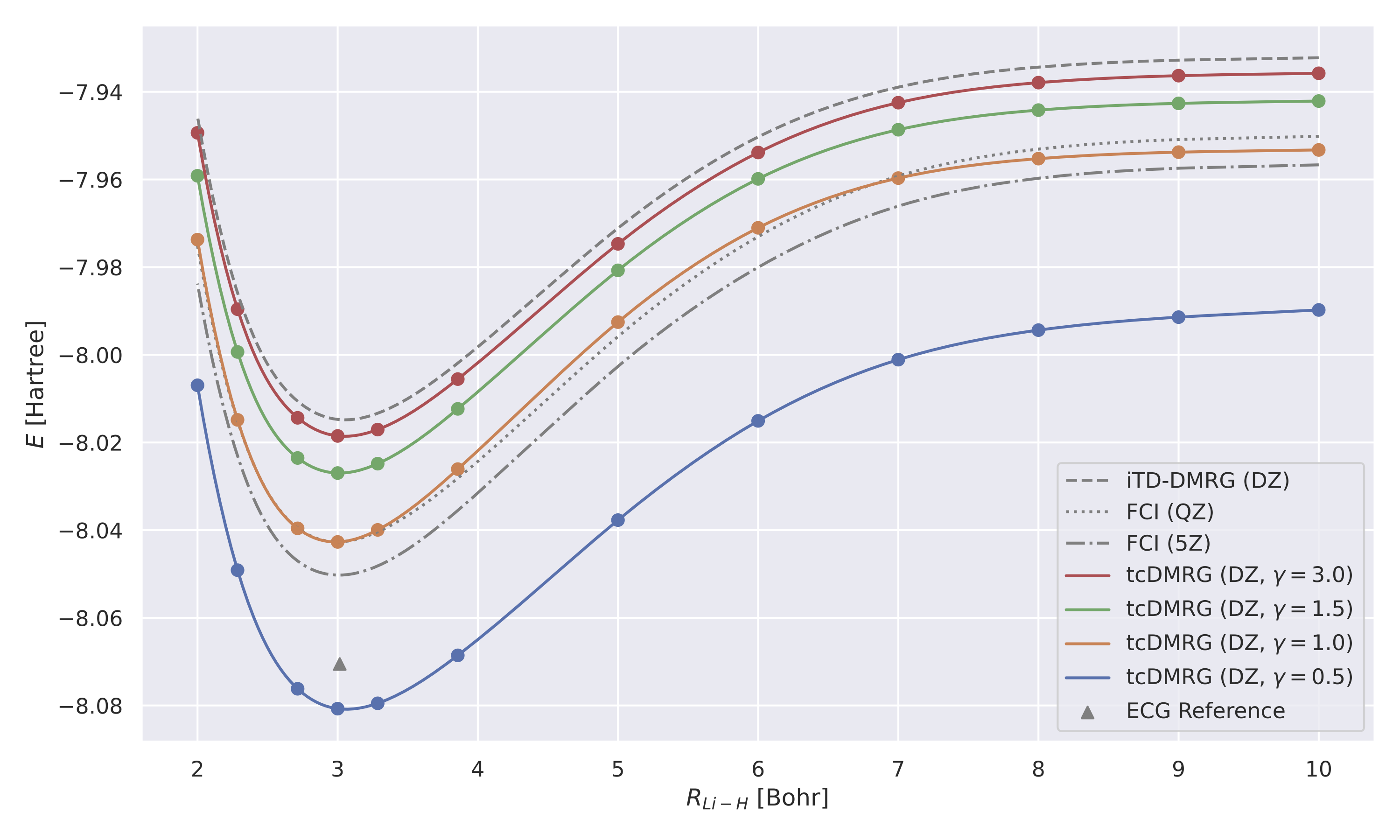

Multi-configurational electronic structure theory delivers the most versatile approximations to many-electron wavefunctions, flexible enough to deal with all sorts of transformations, ranging from electronic excitations, to open-shell molecules and chemical reactions. Multi-configurational models are therefore essential to establish universally applicable, predictive ab initio methods for chemistry. Here, we present a discussion of explicit correlation approaches which address the nagging problem of dealing with static and dynamic electron correlation in multi-configurational active-space approaches. We review the latest developments and then point to their key obstacles. Our discussion is supported by new data obtained with tensor network methods. We argue in favor of simple electron-only correlator expressions that may allow one to define transcorrelated models in which the correlator does not bear a dependence on molecular structure.

@article{Szenes2024, author = {Szenes, Kalman and M\"{o}rchen, Maximilian and Fischill, Paul and Reiher, Markus}, title = {{Striking the right balance of encoding electron correlation in the Hamiltonian and the wavefunction ansatz}}, journal = {Faraday Discussion on Correlated Electronic Structure}, year = {2024}, doi = {10.1039/D4FD00060A}, volume = {254}, number = {0}, pages = {359--381}, location = {{London, UK}}, }

-

Domain-Specific Implementation of High-Order Discontinuous Galerkin Methods in Spherical Geometry

Kalman Szenes, Niccolò Discacciati, Luca Bonaventura, and William Sawyer

Computer Physics Communications, 2024

In recent years, domain-specific languages (DSLs) have achieved significant success in large-scale efforts to reimplement existing meteorological models in a performance-portable manner. The dynamical cores of these models are based on finite difference and finite volume schemes, and existing DSLs are generally limited to supporting only these numerical methods. In the meantime, there have been numerous attempts to use high-order Discontinuous Galerkin (DG) methods for atmospheric dynamics, which are currently largely unsupported in mainstream DSLs. In order to link these developments, we present two domain-specific languages which extend the existing GridTools (GT) ecosystem to high-order DG discretization. The first is a C++-based DSL called G4GT, which, despite being no longer supported, gave us the impetus to implement extensions to the subsequent Python-based production DSL called GT4Py to support the operations needed for DG solvers. As a proof of concept, the shallow water equations in spherical geometry are implemented in both DSLs, thus providing a blueprint for the application of domain-specific languages to the development of global atmospheric models. We believe this is the first GPU-capable DSL implementation of DG in spherical geometry. The results demonstrate that a DSL designed for finite difference/volume methods can be successfully extended to implement a DG solver, while preserving the performance portability of the DSL.

@article{szenesDomainspecificImplementationHighorder2024, title = {Domain-Specific Implementation of High-Order {{Discontinuous Galerkin}} Methods in Spherical Geometry}, author = {Szenes, Kalman and Discacciati, Niccol\`{o} and Bonaventura, Luca and Sawyer, William}, date = {2024-02-01}, year = {2024}, journaltitle = {Computer Physics Communications}, journal = {Computer Physics Communications}, volume = {295}, pages = {108993}, issn = {0010-4655}, doi = {10.1016/j.cpc.2023.108993}, url = {https://www.sciencedirect.com/science/article/pii/S0010465523003387}, urldate = {2023-11-12}, keywords = {Discontinuous Galerkin methods,Domain-specific languages,GPU programming}, }

- SC’23

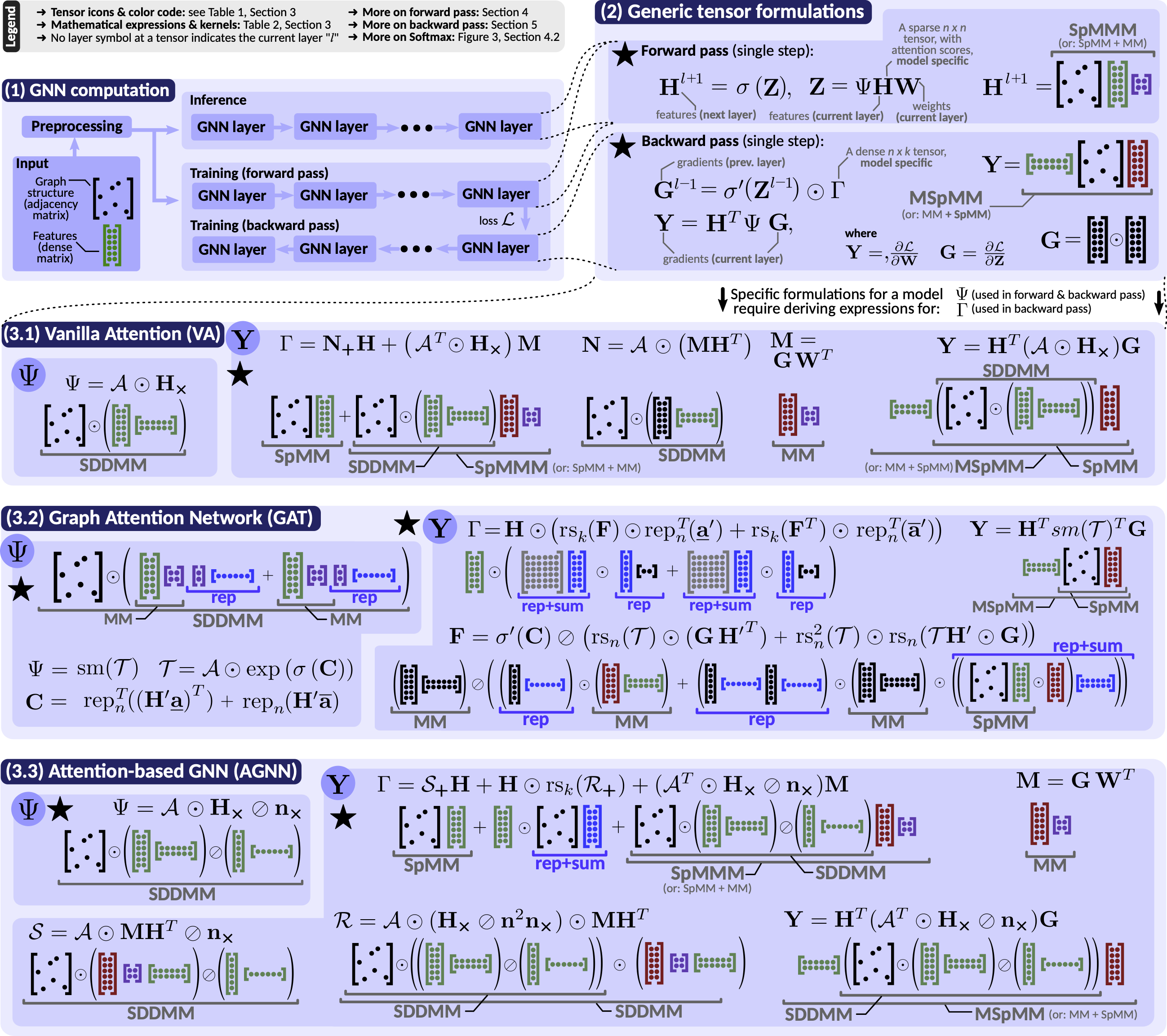

High-Performance and Programmable Attentional Graph Neural Networks with Global Tensor Formulations

Maciej Besta, Pawel Renc, Robert Gerstenberger, Paolo Sylos Labini, Alexandros Ziogas, Tiancheng Chen, Lukas Gianinazzi, Florian Scheidl, Kalman Szenes, Armon Carigiet, Patrick Iff, Grzegorz Kwasniewski, Raghavendra Kanakagiri, Chio Ge, Sammy Jaeger, Jarosław Wąs, Flavio Vella, and Torsten Hoefler

Supercomputing, Denver, CO, USA, 2023

Graph attention models (A-GNNs), a type of Graph Neural Networks (GNNs), have been shown to be more powerful than simpler convolutional GNNs (C-GNNs). However, A-GNNs are more complex to program and difficult to scale. To address this, we develop a novel mathematical formulation, based on tensors that group all the feature vectors, targeting both training and inference of A-GNNs. The formulation enables straightforward adoption of communication-minimizing routines, it fosters optimizations such as vectorization, and it enables seamless integration with established linear algebra DSLs or libraries such as GraphBLAS. Our implementation uses a data redistribution scheme explicitly developed for sparse-dense tensor operations used heavily in GNNs, and fusing optimizations that further minimize memory usage and communication cost. We ensure theoretical asymptotic reductions in communicated data compared to the established message-passing GNN paradigm. Finally, we provide excellent scalability and speedups of even 4–5x over modern libraries such as Deep Graph Library.

@article{bestaHighPerformanceProgrammableAttentional2023, title = {High-{{Performance}} and {{Programmable Attentional Graph Neural Networks}} with {{Global Tensor Formulations}}}, author = {Besta, Maciej and Renc, Pawel and Gerstenberger, Robert and Sylos Labini, Paolo and Ziogas, Alexandros and Chen, Tiancheng and Gianinazzi, Lukas and Scheidl, Florian and Szenes, Kalman and Carigiet, Armon and Iff, Patrick and Kwasniewski, Grzegorz and Kanakagiri, Raghavendra and Ge, Chio and Jaeger, Sammy and W\k{a}s, Jaros\l{}aw and Vella, Flavio and Hoefler, Torsten}, date = {2023-11-11}, year = {2023}, publisher = {{Association for Computing Machinery}}, location = {{Denver, CO, USA}}, doi = {10.1145/3581784.3607067}, url = {https://doi.org/10.1145/3581784.3607067}, urldate = {2023-11-04}, journal = {Supercomputing}, }